A rarely used legacy misfeature of the main Internet email protocol creeps back from irrelevance as a minor annoyance. You should ask your mail and antispam provider about their approach to 'SMTP callbacks'. Be wary of any assertion that is not backed by evidence.

Even if you are an IT professional and run an email system, you could be forgiven for not being immediately aware that there is such a thing as SMTP callbacks, also referred to as callback verification. As you will see from the Wikipedia article, the feature was never widely adopted, and for all too understandable reasons.

If you do run a mail system, you have probably heard about that feature's predecessor, the still-required but rarely used SMTP VRFY and EXPN commands. Those commands offer a way to verify whether an address is valid and to show the component addresses that a mailing list resolves to, respectively.

Back when all things inter-networking were considered experimental and it was generally thought that information should flow freely in and between those experimental networks, it was quite common for mail servers to offer VRFY and EXPN service to all comers.

I'm old enough to remember using VRFY by hand, telnet-ing to port 25 on a mail server and running VRFY $user@$domain.$tld commands to check whether an email address was indeed valid. I've forgotten which domains and persons were involved, but I imagine the reason why was that I wanted to contact somebody who had said something interesting in a post to a USENET news group.

But networkers trying to make contact with each other were not the only ones who discovered the VRFY and EXPN commands. Soon spammers were using those commands to actively harvest actually! valid! deliverable! addresses, and by 1999 the RFC2505 best practices document recommended disabling the features altogether. After all, there would usually be some other way available to find somebody's email address (there was even a FAQ, a longish Frequently Asked Questions document with apparent USENET origins written and maintained on the subject, a copy of which can be found here).

In roughly the same time frame, somebody came up with the idea of SMTP callbacks. The idea was that all domains on the Internet need to publish the address of their mail exchangers via DNS MX (mail exchanger) records. The logical next step is then that when a piece of mail arrives over SMTP, the receiving end should be able to contact the sender domain's known mail exchanger to check that the sender address is indeed valid. If you by now hear the echoes of VRFY and EXPN, you're right. There are indications that some early implementations did in fact use VRFY for that purpose.

But then the world changed, and you could not rely on VRFY being available in the post-RFC2505 world.

In the post-RFC2505 world, the other side would most likely not offer up any useful information in response to VRFY commands, and you would most likely be limited to the short interchange that the Wikipedia entry quotes,

HELO <verifier host name>

MAIL FROM:<>

RCPT TO:<the address to be tested>

QUIT

which a perceptive reader would identify as only verifying in a very limited sense that the domain's mail exchanger was indeed equipped with a functional SMTP service.
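To make the limits of that exchange concrete, here is a minimal Python sketch that only builds the four command lines and sorts a reply line into the three standard SMTP reply classes. The function names `callback_dialog` and `classify_reply` are made up for this illustration, no network traffic is involved, and this is not taken from any real mail server's callback code.

```python
def callback_dialog(verifier_host: str, address: str) -> list[str]:
    """Return the command lines of the rump callback dialog quoted above."""
    return [
        f"HELO {verifier_host}",
        "MAIL FROM:<>",          # null sender, as in a bounce
        f"RCPT TO:<{address}>",  # the address to be tested
        "QUIT",
    ]


def classify_reply(reply_line: str) -> str:
    """Sort an SMTP reply line by the first digit of its reply code."""
    first = reply_line[:1]
    if first == "2":
        return "accepted"    # recipient accepted at this moment, nothing more
    if first == "4":
        return "temporary"   # try again later, e.g. from a greylisting host
    if first == "5":
        return "rejected"    # permanent failure
    return "unknown"


if __name__ == "__main__":
    for line in callback_dialog("mx.nxdomain.nx", "someone@bsdly.com"):
        print(line)
```

Note that none of the three outcomes says anything about which host actually tried to send mail using the tested address.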

It is worth noting, as many have over the years, that the MX records only specify where a domain expects to receive mail, not where valid mail from the domain is supposed to originate. Several mechanisms to help identify valid mail senders for a domain have been devised in the intervening years, but none existed at the time SMTP callbacks were considered even remotely useful.

For reasons that are not entirely clear, some developers kept working on SMTP callback code and several mail server implementations available today (2017) still contain code that looks like it was intended to support information-rich callbacks, if the system was configured to enable the feature at all. The default configurations in general do not enable the SMTP callback feature, and mail admins rarely bother to even learn much about the largely disused and (in my opinion at least) not too well thought out feature.

This all happened back in the 1990s, but quite recently an incident occurred that indicates that in some pockets of the Internet, SMTP callbacks are still in use, and in at least some cases data from the callbacks are used to generate blacklists and block mail delivery. The last part should raise a few eyebrows at least.

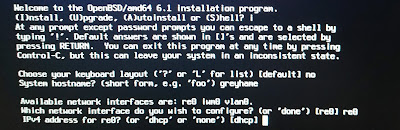

Jumping forward from the distant 1990s to the present day, regular readers of this column will be aware that bsdly.net and cooperating domains run SMTP service with OpenBSD spamd(8) doing greylisting service, and that the spamd(8) setup produces a greytrapping-based blacklist which is available for download, dumped to a file (available here and here) once per hour.

Maintaining the mail system and the blacklist also involves keeping an eye on mail-related activities, and invalid addresses in our domains that turn up in the greylist are usually added to the list of spamtrap addresses within not too many hours after they first appear. The process of actually adding spamtrap addresses is a manual one, but based on the output of pathetically simple shell scripts that run as cron jobs.

The list of spamtraps has grown over the years to more than 38 000 entries. Most of the entries have local parts that are pure generated gibberish, some entries are probably degraded versions of earlier spamtrap addresses and some again seem to conform with specific patterns, including but not limited to SMTP or NNTP message IDs.

On August 19th and 20th 2017 I noticed a different yet familiar pattern in some of the new entries.

The entry that caught my eye had the MAIL FROM: part as

mx42.antispamcloud.com-1503146097-testing@bsdly.com

The local part pattern was somewhat familiar, and breaks down to

$localhostname-$epochtime-testing

with @targetdomain.$tld (in our case, bsdly.com) appended. I had at this point totally forgotten about SMTP callbacks, but I decided to check the logs for any traces of activity involving that host. The only trace I could find in the logs was at the spamd-serving firewall in front of the bsdly.com domain's secondary mail exchanger:

Aug 19 14:35:27 delilah spamd[26915]: 207.244.64.181: connected (25/24)

Aug 19 14:35:38 delilah spamd[26915]: (GREY) 207.244.64.181: <> -> <mx42.antispamcloud.com-1503146097-testing@bsdly.com>

Aug 19 14:35:38 delilah spamd[15291]: new entry 207.244.64.181 from <> to <mx42.antispamcloud.com-1503146097-testing@bsdly.com>, helo mx18-12.smtp.antispamcloud.com

Aug 19 14:35:38 delilah spamd[26915]: 207.244.64.181: disconnected after 11 seconds.

Essentially a normal first contact: spamd at our end answers slowly, one byte per second, but the greylist entry is created in the expectation that any caller with a valid message to deliver would try again within a reasonable time. The spamd synchronization between the hosts in our group of greylisting hosts would see to it that an entry matching this sequence would appear in the greylist on all participating hosts.
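The address pattern and the (GREY) log line above are regular enough to pick apart mechanically. The following Python sketch is an illustration only: the regular expressions are written against the samples quoted in this article, not against any formal specification of spamd's log format or of the callback software, and the names `PROBE_RE`, `GREY_RE`, `parse_probe` and `parse_grey_line` are hypothetical.

```python
import re
from typing import NamedTuple, Optional


class Probe(NamedTuple):
    host: str     # the $localhostname part
    epoch: int    # the $epochtime part
    domain: str   # the target domain


# $localhostname-$epochtime-testing@targetdomain.$tld, as seen in the samples
PROBE_RE = re.compile(
    r"^(?P<host>[A-Za-z0-9.-]+)-(?P<epoch>\d{10})-testing@(?P<domain>[A-Za-z0-9.-]+)$"
)

# "(GREY) <ip>: <sender> -> <recipient>", as seen in the log excerpt
GREY_RE = re.compile(r"\(GREY\) (?P<ip>\S+): <(?P<sender>[^>]*)> -> <(?P<rcpt>[^>]*)>")


def parse_probe(address: str) -> Optional[Probe]:
    """Return the parts of a callback-style probe address, or None."""
    m = PROBE_RE.match(address)
    if m is None:
        return None
    return Probe(m.group("host"), int(m.group("epoch")), m.group("domain"))


def parse_grey_line(line: str) -> Optional[dict]:
    """Extract IP, sender and recipient from a (GREY) log line, or None."""
    m = GREY_RE.search(line)
    return m.groupdict() if m else None


if __name__ == "__main__":
    line = ("Aug 19 14:35:38 delilah spamd[26915]: (GREY) 207.244.64.181: "
            "<> -> <mx42.antispamcloud.com-1503146097-testing@bsdly.com>")
    entry = parse_grey_line(line)
    print(entry["ip"], parse_probe(entry["rcpt"]))
```

An empty sender combined with a recipient that matches the probe pattern is what sets these entries apart from the generated gibberish that makes up most of the greylist.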

But the retry never happened, and even if it had, that particular local-part would anyway have produced an "Unknown user" bounce. At that point I decided to do a bit of investigation, dug out what seemed to be a reasonable point of contact for the antispamcloud.com domain and sent an email with a question about the activity.

That message bounced, with the following explanation in the bounce message body:

DOMAINS@ANTISPAMCLOUD.COM

host filter10.antispamcloud.com [31.204.155.103]

SMTP error from remote mail server after end of data:

550 The sending IP (213.187.179.198) is listed on https://spamrl.com as a source of dictionary attacks.

As you have probably guessed, 213.187.179.198 is the IPv4 address of the primary mail exchanger for bsdly.net, bsdly.com and a few other domains under my care.

If you go to the URL quoted in the bounce, you will notice that the only point of contact is via an email address in an unrelated domain.

I did fire off a message to that address from an alternate site, but before the answer to that one arrived, I had managed to contact another of their customers and got confirmation that they were indeed running with an exim setup that used SMTP callbacks.

The spamrl.com web site states clearly that they will not supply any evidence in support of their decision to blacklist. Somebody claiming to represent spamrl.com did respond to my message, but as could be expected from their published policy was not willing to supply any evidence to support the claim stated in the bounce.

In my last message to spamrl.com before starting to write this piece, I advised

I remain unconvinced that the description of that problem is accurate, but investigation at this end can not proceed without at least some supporting evidence such as times of incidents, addresses or even networks affected.

If there is a problem at this end, it will be fixed. But that will not happen as a result of handwaving and insults. Actual evidence to support further investigation is needed.

Until verifiable evidence of some sort materializes, I will assume that your end is misinterpreting normal greylisting behavior or acting on unfounded or low-quality reports from less than competent sources.

The domain bsdly.com was one I registered some years back mainly to fend off somebody who offered to help the owner of the bsdly.net domain acquire the very similar bsdly.com domain at the price of a mere few hundred dollars.

My response was to spend something like ten dollars (or was it twenty?) to register the domain via my regular registrar. I may even have sent back a reply about trying to sell me what I already owned, but I have not bothered to dig that far back into my archives.

The domain does receive mail, but is otherwise not actively used. However, as the list of spamtraps can attest (the full list does not display in a regular browser, since some of the traps are interpreted as HTML tags; if you want to see it all, fetch the text file instead), others have at times tried to pass off something or other with from addresses in that domain.

But with the knowledge that this outfit's customers are believers in SMTP callbacks as a way to identify spam, here is my hypothesis on what actually happened:

On August 19th 2017, my greylist scanner identified the following new entries referencing the bsdly.com domain:

anecowuutp@bsdly.com

pkgreewaa@bsdly.com

eemioiyv@bsdly.com

keerheior@bsdly.com

mx42.antispamcloud.com-1503146097-testing@bsdly.com

vbehmonmin@bsdly.com

euiosvob@bsdly.com

otjllo@bsdly.com

akuolsymwt@bsdly.com

I'll go out on a limb and guess that mx42.antispamcloud.com was contacted by any of the roughly 5000 hosts blacklisted at bsdly.net at the time, with an attempt to deliver a message with a MAIL FROM: of either anecowuutp@bsdly.com, pkgreewaa@bsdly.com, eemioiyv@bsdly.com or perhaps most likely keerheior@bsdly.com, which appears as a bounce-to address in the same hourly greylist dump where mx42.antispamcloud.com-1503146097-testing@bsdly.com first appears as a To: address.

The first seen time in epoch notation for keerheior@bsdly.com is

1503143365

which translates via date -r to

Sat Aug 19 13:49:25 CEST 2017

while mx42.antispamcloud.com-1503146097-testing@bsdly.com is first seen here at epoch 1503146138, which translates to Sat Aug 19 14:35:38 CEST 2017.
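For readers without a `date -r` at hand, the same conversions can be reproduced with a few lines of Python. This is a small sketch under one stated assumption: CEST is modelled as a fixed UTC+2 offset, which holds for August 2017 but is not a general time zone implementation. The names `CEST`, `epoch_to_cest` and `format_like_date` are made up for the example.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a fixed UTC+2 offset stands in for CEST (valid for August 2017).
CEST = timezone(timedelta(hours=2), "CEST")


def epoch_to_cest(epoch: int) -> datetime:
    """Convert seconds since the epoch to an aware datetime in CEST."""
    return datetime.fromtimestamp(epoch, tz=CEST)


def format_like_date(epoch: int) -> str:
    """Format roughly the way the date output quoted above looks."""
    return epoch_to_cest(epoch).strftime("%a %b %d %H:%M:%S %Z %Y")


if __name__ == "__main__":
    first_seen_sender = 1503143365   # keerheior@bsdly.com first seen
    first_seen_probe = 1503146138    # callback address first seen
    embedded_in_probe = 1503146097   # epoch inside the callback local part

    print(format_like_date(first_seen_sender))
    print(format_like_date(first_seen_probe))
    print(first_seen_probe - embedded_in_probe, "seconds from embedded epoch to first seen")
    print(first_seen_probe - first_seen_sender, "seconds between the two first-seen times")
```

The arithmetic shows that the epoch embedded in the callback address is 41 seconds earlier than the time the address was first seen here, and that keerheior@bsdly.com was first seen 2773 seconds (roughly 46 minutes) before the callback arrived.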

The data indicate that this initial (and only) attempt to contact was aimed at the bsdly.com domain's secondary mail exchanger, and was intercepted by the greylisting spamd that sits in the incoming signal path to there. The other epoch-tagged callbacks follow the same pattern, as can be seen from the data preserved here.

Whatever action or address triggered the callback, the callback appears to have followed the familiar script:

- register attempt to deliver mail

- look up the domain stated in the MAIL FROM: or perhaps even the HELO or EHLO

- contact the domain's mail exchangers with the rump SMTP dialog quoted earlier

- with no confirmation or otherwise of anything other than the fact that the domain's mail exchangers do listen on the expected port, proceed to whatever the next step is.

The known facts at this point are:

- a mail system that is set up for SMTP callbacks received a request to deliver mail from keerheior@bsdly.com

- the primary mail exchanger for bsdly.com has the IPv4 address 213.187.179.198

Both of these are likely true. The second we know for sure, and the first is quite likely. What is missing here is any consideration of where the request to deliver came from.

From the data we have here, we do not have any indication of what host contacted the system that initiated the callback. In a modern configuration, it is reasonable to expect that a receiving system checks for sender validity via any SPF, DKIM or DMARC records available, or for that matter, greylist and wait for the next attempt (in fact, greylisting before performing any other checks - as an OpenBSD spamd(8) setup would do by default - is likely to be the least resource intensive approach).

We have no indication that the system performing the SMTP callout used any such mechanism to find an indication as to whether the communication partner was in fact in any way connected to the domain it was trying to deliver mail for.

My hypothesis is that whatever code is running on the SMTP callback adherents' systems does not check the actual sending IP address, but assumes that any message claiming to be from a domain must in fact involve the primary mail exchanger of that domain. Since the code likely predates the SPF, DKIM and DMARC specifications by at least a decade, it will not even try to check those types of information. Given the context it is a little odd, but perhaps within reason, that in all cases we see here the callback is attempted not to the domain's primary mail exchanger, but to the secondary.

With somebody or perhaps even several somebodies generating nonsense addresses in the bsdly.com domain at an appreciable rate (see the record of new spamtraps starting May 20th, 2017, alternate location here) and trying to deliver using those fake From: addresses to somewhere doing SMTP callback, it's not much of a stretch to assume that the code was naive enough to conclude that the purported sender domain's primary mail exchanger was indeed performing a dictionary attack.
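To spell out the hypothesis, here is a deliberately simplified Python sketch of the suspected faulty reasoning next to the obvious alternative. It is pure guesswork in code form: I have not seen the software involved, the function names `naive_blame` and `blame_connecting_host` are invented, and 192.0.2.74 is just a documentation-range placeholder for whatever host actually connected.

```python
# Known from the bounce and our own records: the primary MX address for bsdly.com.
PRIMARY_MX = {"bsdly.com": "213.187.179.198"}


def naive_blame(connecting_ip: str, mail_from: str, primary_mx: dict) -> str:
    """Hypothesized logic: blame the primary MX of the claimed sender domain.

    The connecting IP is only used as a fallback when the domain is unknown.
    """
    domain = mail_from.rpartition("@")[2].lower()
    return primary_mx.get(domain, connecting_ip)


def blame_connecting_host(connecting_ip: str, mail_from: str) -> str:
    """The alternative: only the host that actually connected can be blamed."""
    return connecting_ip


if __name__ == "__main__":
    spammer_ip = "192.0.2.74"          # hypothetical placeholder address
    forged = "keerheior@bsdly.com"     # forged sender seen in our greylist
    print("naive:", naive_blame(spammer_ip, forged, PRIMARY_MX))
    print("sane: ", blame_connecting_host(spammer_ip, forged))
```

Under the naive variant, every forged bsdly.com sender address counts against 213.187.179.198 regardless of which host made the delivery attempt, which would be consistent with the "dictionary attack" listing quoted in the bounce.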

The most useful lesson to take home from this sorry affair is likely to be that you need to kill SMTP callback setups in any system where you may find them. In today's environment, SMTP callbacks do not in fact provide useful information that is not available from other public sources, and naive use of results from those lookups is likely to harm unsuspecting third parties.

So,

- If you are involved in selling or operating a system that behaves like the one described here and are in fact generating blacklists based on those very naive assumptions, you need to stop doing so right away.

Your mistaken assumptions help produce bad data which could lead to hard to debug problems for innocent third parties.

Or as we say in the trade, you are part of the problem.

- If you are operating a system that does SMTP callbacks but doesn't do much else, you are part of a small problem and likely only inconveniencing yourself and your users.

The fossil record (aka the accumulated collection of spamtrap addresses at bsdly.net) indicates that the callback variant that includes epoch times is rare enough (approximately 100 unique hosts registered over a decade) that callback activity in total volume probably does not rise above the level of random background noise.

There may of course be callback variants that have other characteristics, and if you see a way to identify those from the data we have, I would very much like to hear from you.

- If you are a customer of somebody selling antispam products, you have reason to demand an answer to just how, if at all, your antispam supplier utilizes SMTP callbacks. If they think it's a fine and current feature, you have probably been buying snake oil for years.

- If you are the developer or maintainer of mail server code that contains the SMTP callbacks feature, please remove the code. Leaving it disabled by default is not sufficient. Removing the code is the only way to make sure the misfeature will never again be a source of confusing problems.

For some hints on what I consider a reasonable and necessary level of transparency in blacklist maintenance, please see my April 2013 piece Maintaining A Publicly Available Blacklist - Mechanisms And Principles.

The data this article is based on still exists and will be available for further study as long as the request comes with a reasonable justification. I welcome comments in the comment field or via email (do factor in any possible greylist delay, though).

Any corrections or updates that I find necessary based on your responses will be appended to the article.

Update 2017-09-05: Since the article was originally published, we've seen a handful of further SMTP callback incidents. The last few we've handled by sending the following to the addresses that could be gleaned from whois on the domain name and source IP address (with mx.nxdomain.nx and 192.0.2.74 inserted as placeholders here to protect the ignorant):

Hi,

I see from my greylist dumps that the host identifying as

mx.nxdomain.nx, IP address 192.0.2.74

is performing what looks like SMTP callbacks, with the (non-existent of course) address

mx.nxdomain.nx-1504629949-testing@bsdly.com

as the RCPT TO: address.

It is likely that this activity has been triggered by spam campaigns using made up addresses in one of our little-used domains as from: addresses.

A series of recent incidents here following the same pattern are summarized in the article

http://bsdly.blogspot.com/2017/08/twenty-plus-years-on-smtp-callbacks-are.html

Briefly, the callbacks do not work as you expect. Please read the article and then disable that misfeature. Otherwise you will be complicit in generating false positives for your SMTP blacklist.

If you have any questions or concerns, please let me know.

Yours sincerely,

Peter N. M. Hansteen

Update 2017-11-02: It looks like the spamrl.com service still has customers that believe in the same claim made in the bounce messages quoted earlier in this article and perform SMTP callbacks exactly like they did when this article was first written. If you have any verifiable information on that outfit and their activities, I would very much like to hear from you.